Using Machine Learning to Detect Eating Activity in Electromyography Data


While enrolled at Arizona State University, one of the class projects for CSE572 Data Mining was to use raw electromyography (EMG) and gyroscope data from the dominant arm of a number of subjects to detect eating activity. The data was provided as raw CSV files, together with a "truth" table of timestamps. Pairing the two yielded labeled data for training machine learning algorithms to detect when users are eating versus not eating. The resulting classifiers were evaluated on accuracy and related metrics and compared against one another. The project was written in Python using the NumPy, Pandas, and SciPy libraries.
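Pairing the raw samples with the truth table amounts to marking every sample whose timestamp falls inside an eating interval. The snippet below is a minimal NumPy illustration, not the project code. It assumes the sensor rows and the truth table share a common clock and that the truth table can be read as (start, end) eating intervals. The function name `label_samples` and the toy values are hypothetical.

```python
import numpy as np


def label_samples(timestamps, eat_intervals):
    """Return 1 for samples inside any [start, end] eating interval, else 0.

    timestamps:    1-D array of sample timestamps (hypothetical layout).
    eat_intervals: sequence of (start, end) pairs taken from the truth table.
    """
    ts = np.asarray(timestamps)
    intervals = np.asarray(eat_intervals, dtype=float).reshape(-1, 2)
    starts = intervals[:, 0][:, None]  # shape (k, 1)
    ends = intervals[:, 1][:, None]    # shape (k, 1)
    # (k, n) boolean matrix: True where sample j lies inside interval i
    inside = (ts[None, :] >= starts) & (ts[None, :] <= ends)
    return inside.any(axis=0).astype(int)


# Toy example: ten samples, two eating intervals
print(label_samples(np.arange(10), [(2, 4), (7, 8)]))  # [0 0 1 1 1 0 0 1 1 0]
```

The broadcast comparison allocates one boolean row per interval, which is inexpensive when each subject has relatively few eating intervals.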

This project was part of my graduate portfolio and therefore required a three-page project report, which details the methodologies used and the lessons learned. The abstract of that report is reproduced below:

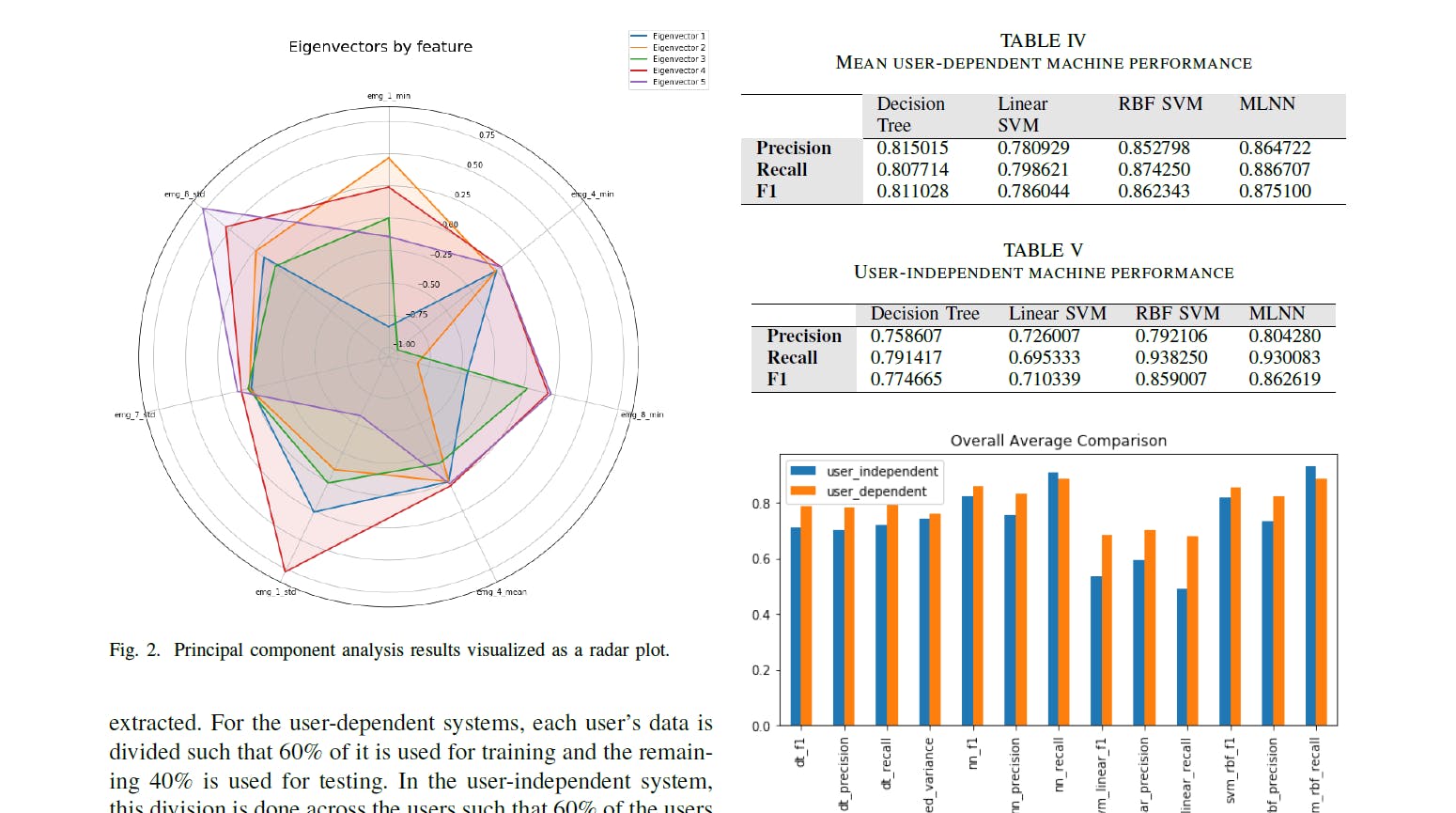

A collection of electromyography [1] data from the dominant arms of thirty users is classified as "eating" or "not eating". The data is reduced in dimensionality, analyzed for principal components, and used to train four different machine learning algorithms that predict the class of test data whose labels are withheld. The four models are trained to predict eating actions in both user-dependent and user-independent settings, and their performance is compared using precision, recall, and F1 score.
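The steps named in the abstract (principal component analysis, a user-independent evaluation, and precision/recall/F1 scoring) can be illustrated with a short NumPy sketch. It is not the report's implementation: PCA is computed directly from an SVD, the function names (`pca_fit`, `pca_transform`, `user_independent_split`, `precision_recall_f1`) are hypothetical, and the feature matrix and user IDs are random placeholders rather than project data. The source does not name the four classifiers, so the sketch stops where one would be trained.

```python
import numpy as np


def pca_fit(features, n_components):
    """Learn the mean and top principal directions of `features` via SVD."""
    X = np.asarray(features, dtype=float)
    mean = X.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value
    _, s, vt = np.linalg.svd(X - mean, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)  # fraction of variance per component
    return mean, vt[:n_components], explained[:n_components]


def pca_transform(features, mean, components):
    """Project rows of `features` onto previously learned components."""
    return (np.asarray(features, dtype=float) - mean) @ components.T


def user_independent_split(user_ids, test_users):
    """Boolean train/test masks that keep every test user's rows out of training."""
    test_mask = np.isin(np.asarray(user_ids), list(test_users))
    return ~test_mask, test_mask


def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 score for the positive ("eating") class."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_pred == positive) & (y_true == positive))
    fp = np.sum((y_pred == positive) & (y_true != positive))
    fn = np.sum((y_pred != positive) & (y_true == positive))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return float(precision), float(recall), float(f1)


# Placeholder data: 5 users x 20 windows, 8 features per window (not project data)
rng = np.random.default_rng(0)
features = rng.normal(size=(100, 8))
users = np.repeat(np.arange(5), 20)

train, test = user_independent_split(users, test_users={3, 4})
mean, comps, explained = pca_fit(features[train], n_components=3)  # fit on training users only
X_train = pca_transform(features[train], mean, comps)
X_test = pca_transform(features[test], mean, comps)
print(X_train.shape, X_test.shape)  # (60, 3) (40, 3)

# A classifier would be trained on X_train here; toy labels stand in for its predictions
print(precision_recall_f1([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0]))
```

Fitting PCA only on the training users keeps the held-out users' data from shaping the projection, which matters for a user-independent test. A user-dependent split would instead hold out a fraction of each user's own samples.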

For academic integrity reasons, I will not be providing the source code for download here. This project was not unique to me: it was assigned to other students in the class and will presumably be assigned in subsequent offerings of the course. The code is available to prospective employers upon request.